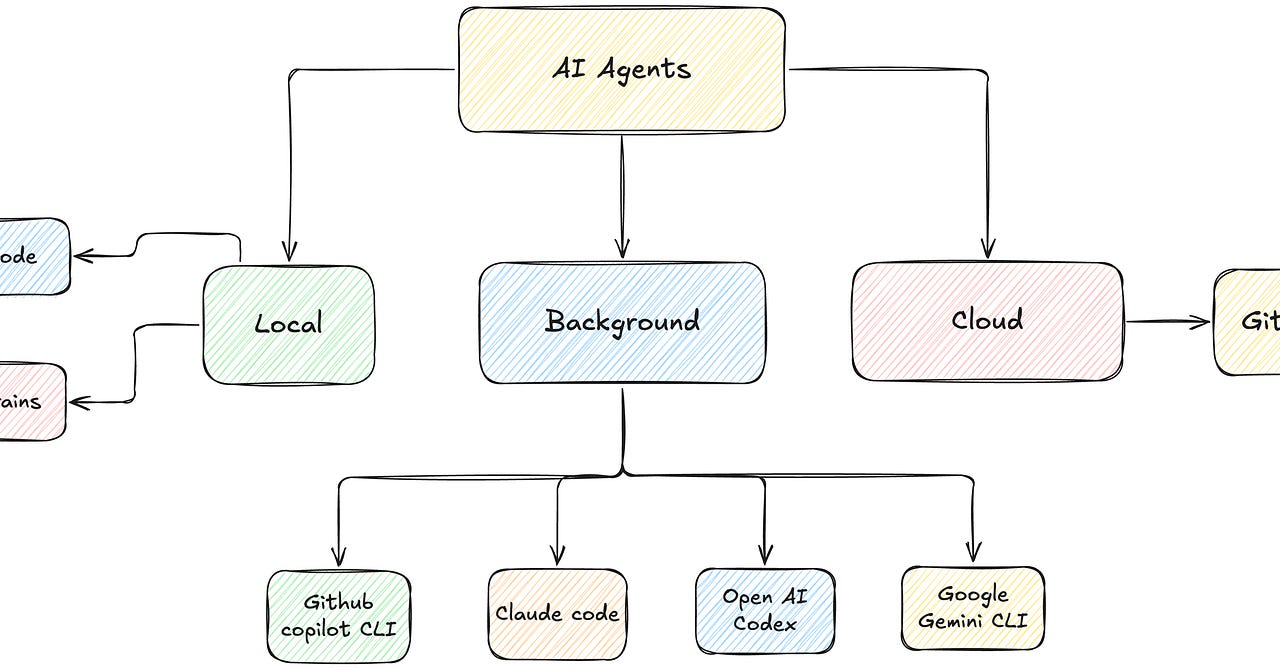

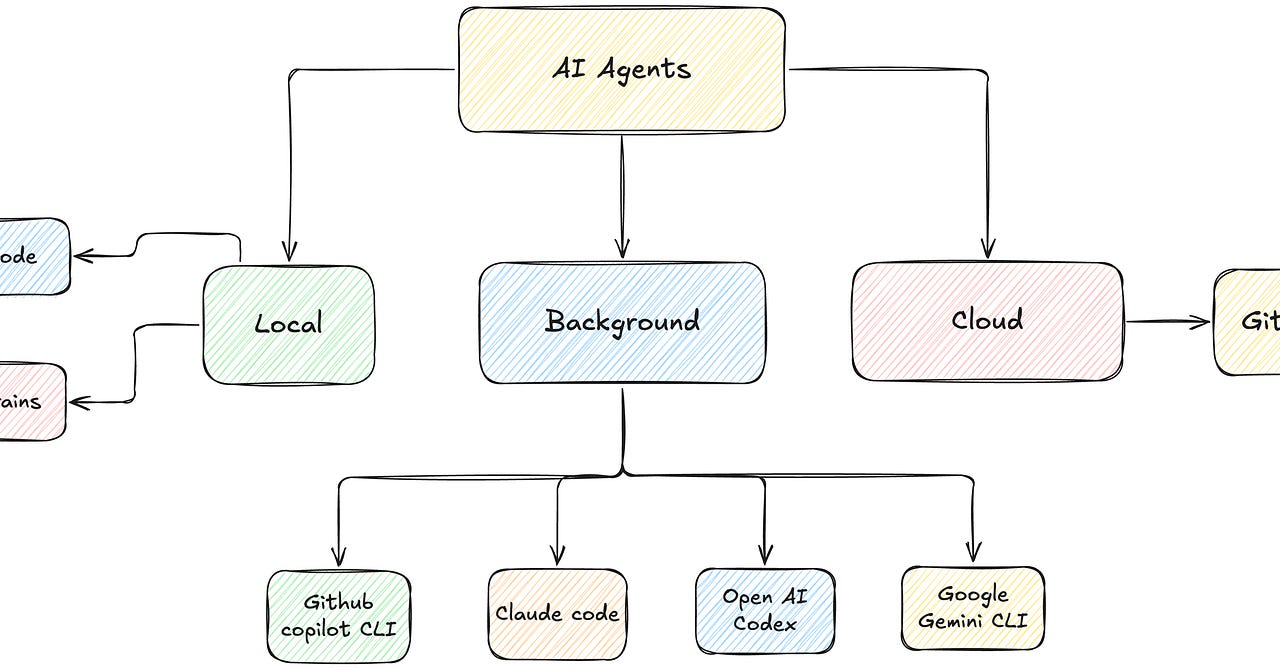

Unpacking Agent skills and AI Coding Agents on CLI

We unpack what AI Coding Agents on the CLI look like with GHC CLI and dive deeper into Agent skills and how to create an Agent

automation hacks

Helping you elevate ⚡️ your software testing and automation.
